
Actors

-

Maintainers

The group maintaining this repository. We create the deployment workflows and service configurations, and curate changes proposed by contributing developers.

-

Developers

People with the technical background to engage with our work, who may contribute back to it, build on top of it, remix it, or be inspired by it to create something better.

-

Hosting provider

They provide and maintain the physical infrastructure, and run the software in this repository, through which operators interact with their deployments. Hosting providers are technical administrators for these deployments, ensuring availability and appropriate performance.

We target small- to medium-scale hosting providers with 20+ physical machines.

-

Operator

They select the applications they want to run. They don't need to own hardware or deal with operations. Operators administer their applications in a non-technical fashion, e.g. as moderators. They pay the hosting provider for registering a domain name, maintaining physical resources, and monitoring deployments.

-

User

They are individuals using applications run by the operators, e.g. to post content.

Glossary

-

Fediverse

A collection of social networking applications that can communicate with each other using a common protocol.

-

Application

User-facing software (e.g. from the Fediverse) configured by operators and used by users.

-

Configuration

A collection of settings for a piece of software.

Example: Configurations are deployed to VMs.

-

Provision

Make a resource, such as a virtual machine, available for use.

-

Deploy

Put software onto computers. The software includes technical configuration that links software components.

-

Migrate

Move service configurations and deployments (including user data) from one hosting provider to another.

-

Resource

A resource for NixOps4 is any external entity that can be declared with NixOps4 expressions and manipulated with NixOps4, such as a virtual machine, an active NixOS configuration, a DNS entry, or a customer database.

-

Resource provider

A resource provider for NixOps4 is an executable that mediates between a resource and NixOps4 using a standardised protocol, allowing NixOps4 to perform CRUD operations on the resource. Refer to the NixOps4 manual for details.

Example: We need a resource provider for obtaining deployment secrets from a database.
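To illustrate the CRUD role of a resource provider, the following Python sketch models a hypothetical provider for deployment secrets, in the spirit of the example above. It is an in-memory toy. The class `SecretsProvider`, its `create`/`read`/`update`/`delete` methods and the sample resource name are assumptions made for illustration. They do not reflect the actual NixOps4 provider protocol, which is specified in the NixOps4 manual.

```python
from dataclasses import dataclass, field


@dataclass
class SecretsProvider:
    """Hypothetical in-memory resource provider for deployment secrets.

    Each resource is identified by a name and holds a dictionary of inputs.
    A real provider would talk to an external database instead.
    """

    _store: dict[str, dict[str, str]] = field(default_factory=dict, repr=False)

    def create(self, name: str, inputs: dict[str, str]) -> dict[str, str]:
        if name in self._store:
            raise KeyError(f"resource {name!r} already exists")
        self._store[name] = dict(inputs)
        return self.read(name)

    def read(self, name: str) -> dict[str, str]:
        # Return a copy so callers cannot mutate provider state directly.
        return dict(self._store[name])

    def update(self, name: str, inputs: dict[str, str]) -> dict[str, str]:
        if name not in self._store:
            raise KeyError(f"resource {name!r} does not exist")
        self._store[name] = dict(inputs)
        return self.read(name)

    def delete(self, name: str) -> None:
        del self._store[name]


provider = SecretsProvider()
provider.create("mastodon-db", {"password": "s3cret"})
provider.update("mastodon-db", {"password": "rotated"})
print(provider.read("mastodon-db"))  # {'password': 'rotated'}
provider.delete("mastodon-db")
```

In this sketch, the orchestrator only ever calls these four operations. The provider alone knows where the secrets actually live.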

-

Runtime backend

A type of environment on which operating systems such as NixOS can run, e.g. bare metal, a hypervisor, or a container runtime.

-

Runtime environment

The concrete environment a deployment runs on, i.e. the interface the deployment works against. See runtime backend.

-

Runtime config

Configuration logic specific to a runtime backend, e.g. how to deploy, how to access object storage.
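The following Python sketch shows how the runtime backend, runtime environment and runtime config relate. Each runtime environment supplies its own deployment steps and object-storage location, while the application configuration passed in stays the same. The names (`RuntimeConfig`, `SingleMachine`, `ProxmoxCluster`, `plan`), the step descriptions and the URLs are hypothetical placeholders, not part of Fediversity.

```python
from dataclasses import dataclass
from typing import Protocol


class RuntimeConfig(Protocol):
    """Hypothetical interface for logic specific to one runtime backend."""

    def deploy_steps(self, configuration: str) -> list[str]: ...

    def object_storage_url(self) -> str: ...


@dataclass
class SingleMachine:
    """A single NixOS machine as the runtime environment."""

    host: str
    storage_url: str

    def deploy_steps(self, configuration: str) -> list[str]:
        # The machine can be switched to the new configuration directly.
        return [f"switch {self.host} to {configuration}"]

    def object_storage_url(self) -> str:
        return self.storage_url


@dataclass
class ProxmoxCluster:
    """A Proxmox hypervisor as the runtime environment."""

    api_url: str
    storage_url: str

    def deploy_steps(self, configuration: str) -> list[str]:
        # A VM must be provisioned first (e.g. orchestrated by OpenTofu).
        return [f"provision VM via {self.api_url}", f"switch VM to {configuration}"]

    def object_storage_url(self) -> str:
        return self.storage_url


def plan(runtime: RuntimeConfig, configuration: str) -> list[str]:
    # The application configuration is unchanged; only the runtime logic varies.
    return runtime.deploy_steps(configuration)


for runtime in (
    SingleMachine(host="nixos-01", storage_url="http://nixos-01:3900"),
    ProxmoxCluster(api_url="https://pve.example.org:8006", storage_url="https://s3.example.org"),
):
    print(type(runtime).__name__, plan(runtime, "fediversity-config"))
```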

Technologies used

NixOS

NixOS is a Linux distribution with a vibrant, reproducible and security-conscious ecosystem. As such, we see NixOS as the only viable way to reliably achieve reproducible outcomes for all of our work.

Considered alternatives include:

- containers: do not by themselves offer the needed reproducibility

npins

npins is a dependency pinning tool for Nix that leaves recursive dependencies explicit, keeping the consumer in control.

Considered alternatives include:

- Flakes: default to implicitly following recursive dependencies, leaving control with the publisher.
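The difference in control can be made concrete with a toy model. It asks which revision a dependency receives for one of its own inputs, such as nixpkgs. This Python sketch is a simplification for illustration only and does not reproduce how npins or Flakes actually resolve inputs. The function `inputs_for` and the revision labels are hypothetical.

```python
def inputs_for(
    dependency_inputs: list[str],
    consumer_pins: dict[str, str],
    publisher_lock: dict[str, str],
    explicit: bool,
) -> dict[str, str]:
    """Return the revision a dependency receives for each of its own inputs.

    explicit=True models the npins style: the consumer passes every input.
    explicit=False models the Flakes default: the publisher's lock decides.
    """
    if explicit:
        missing = [name for name in dependency_inputs if name not in consumer_pins]
        if missing:
            # Nothing is inherited implicitly, so unpinned inputs are an error.
            raise ValueError(f"consumer must pin: {missing}")
        return {name: consumer_pins[name] for name in dependency_inputs}
    # Implicit following: the publisher's lock is used. In Flakes, the consumer
    # would need an explicit override (`follows`) to change this.
    return {name: publisher_lock[name] for name in dependency_inputs}


consumer = {"nixpkgs": "rev-chosen-by-consumer"}
publisher = {"nixpkgs": "rev-chosen-by-publisher"}
print(inputs_for(["nixpkgs"], consumer, publisher, explicit=True))
# {'nixpkgs': 'rev-chosen-by-consumer'}
print(inputs_for(["nixpkgs"], consumer, publisher, explicit=False))
# {'nixpkgs': 'rev-chosen-by-publisher'}
```

The explicit style costs the consumer some bookkeeping, because every input must be pinned. In return, no revision enters the build without the consumer having chosen it.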

SelfHostBlocks

SelfHostBlocks offers Nix module contracts to decouple application configuration from implementation details, empowering user choice by providing sane defaults behind a unified interface. Offered contracts include back-ups, reverse proxies, single sign-on and LDAP. In addition, we have been in contact with its creator.

Considered alternatives include:

- nixpkgs-provided NixOS service modules: support far more applications, but are tightly coupled to specific service providers; we expect them to follow suit sooner or later.

- NixOS service modules curated from scratch: would support any setup imaginable, but do not seem to align as well with our research-oriented goals.
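The idea behind a module contract can be illustrated outside Nix. In the following Python sketch, an application states only what it needs from a reverse proxy, and interchangeable implementations turn that request into their own configuration. This is not the SelfHostBlocks interface, whose contracts are NixOS module options. The names `ReverseProxyRequest`, `nginx_provider`, `caddy_provider` and `configure` are hypothetical, and the generated snippets are illustrative rather than complete server configurations.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ReverseProxyRequest:
    """What an application asks of the (hypothetical) reverse-proxy contract."""

    domain: str
    upstream_port: int


# A provider turns a contract request into configuration for one implementation.
Provider = Callable[[ReverseProxyRequest], str]


def nginx_provider(req: ReverseProxyRequest) -> str:
    return "\n".join([
        "server {",
        f"    server_name {req.domain};",
        "    location / {",
        f"        proxy_pass http://127.0.0.1:{req.upstream_port};",
        "    }",
        "}",
    ])


def caddy_provider(req: ReverseProxyRequest) -> str:
    return "\n".join([
        f"{req.domain} {{",
        f"    reverse_proxy 127.0.0.1:{req.upstream_port}",
        "}",
    ])


def configure(app_request: ReverseProxyRequest, provider: Provider) -> str:
    # The application depends only on the request type, never on the provider.
    return provider(app_request)


request = ReverseProxyRequest(domain="social.example.org", upstream_port=3000)
print(configure(request, nginx_provider))
print(configure(request, caddy_provider))
```

Swapping `nginx_provider` for `caddy_provider` requires no change to the application's request. This is the decoupling the contracts aim for.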

OpenTofu

OpenTofu is the leading open-source framework for infrastructure-as-code. As a result, it offers a vibrant ecosystem of 'provider' plugins integrating various programs and services. This allows it to facilitate automated deployment pipelines, including ones involving hypervisors and DNS programs, which are relevant to our project.

Considered alternatives include:

- Terraform: not open-source

Proxmox

Proxmox is a hypervisor, allowing us to create VMs for our applications while adhering to our goal of preventing lock-in. In addition, it has been packaged for Nix, which simplifies the requirements for users setting up our software.

Considered alternatives include:

- OpenNebula: seemed less mature

Garage

Garage is a distributed object storage service. For compatibility with existing clients, it reuses the protocol of Amazon S3.

Considered alternatives include:

- file storage: less centralized for backups

PostgreSQL

PostgreSQL is a relational database. It is used by most of our applications.

Considered alternatives include:

- SQLite: the default option for development in many applications, but less optimized for performance, and less centralized for backups

Valkey

Valkey is a key-value store. It is an open-source fork of Redis.

Considered alternatives include:

- Redis: not open-source

OpenSearch

OpenSearch offers full-text search, and is used for this purpose in many applications. It is an open-source fork of Elasticsearch.

Considered alternatives include:

- Elasticsearch: not open-source

OctoDNS

OctoDNS is a tool for managing DNS records as code, and may be configured using the Nix-native NixOS-DNS.

Considered alternatives include:

- PowerDNS: offers a front-end option, but less geared toward the use-case of configuring by Nix

Authelia

Authelia is a single sign-on provider that integrates with LDAP.

Considered alternatives include:

- Kanidm: does not offer full LDAP support

- Authentik: larger package with focus on many things we do not need

- Keycloak: larger package with focus on many things we do not need

lldap

lldap is a lightweight LDAP server, allowing us to centralize user roles across applications.

Considered alternatives include:

- 389 DS: older, larger package

- FreeIPA: wrapper around 389 DS
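As an illustration of centralizing roles, the following Python sketch builds the kind of LDAP search filter that an application, or Authelia, could use to find the members of a group in lldap. It makes three assumptions: lldap's default layout, with users under `ou=people` and groups under `ou=groups` of the base DN; user entries carrying `objectClass=person`; and support for the `memberOf` attribute. The base DN, the group name and the helper functions are placeholders. Assertion values are escaped as described in RFC 4515. Group names are restricted to a safe character set so that the separate DN escaping rules of RFC 4514 are not needed.

```python
import re

# Characters that must be escaped in LDAP filter assertion values (RFC 4515).
_FILTER_ESCAPES = {"\\": r"\5c", "*": r"\2a", "(": r"\28", ")": r"\29", "\0": r"\00"}
# Restricting group names avoids needing DN escaping (RFC 4514) as well.
_SAFE_GROUP = re.compile(r"[A-Za-z0-9_-]+")


def escape_filter_value(value: str) -> str:
    return "".join(_FILTER_ESCAPES.get(ch, ch) for ch in value)


def group_member_filter(group: str, base_dn: str) -> str:
    """Filter matching user entries that are members of `group` (hypothetical helper)."""
    if not _SAFE_GROUP.fullmatch(group):
        raise ValueError(f"unsupported group name: {group!r}")
    group_dn = f"cn={group},ou=groups,{base_dn}"
    return f"(&(objectClass=person)(memberOf={escape_filter_value(group_dn)}))"


print(group_member_filter("mastodon_admins", "dc=example,dc=org"))
# (&(objectClass=person)(memberOf=cn=mastodon_admins,ou=groups,dc=example,dc=org))
```

With such a group defined once in lldap, each application can map the group to its own notion of, e.g., an administrator role.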

Attic

Attic is a multi-tenant Nix binary cache written in Rust, featuring recency-based garbage collection.

Considered alternatives include:

- cache-server: distributed cache written in Python that seems more of a research project than an actively maintained repository.
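Recency-based garbage collection can be sketched as follows. Each cached object records when it was last accessed, and a collection pass removes objects not accessed within a retention period. This Python toy model only clarifies the idea and is not Attic's implementation. The `RecencyCache` class, its parameters and the store paths are hypothetical, and timestamps are passed in explicitly to keep the example deterministic.

```python
from dataclasses import dataclass, field


@dataclass
class RecencyCache:
    """Toy cache whose garbage collection is based on last access time."""

    retention_seconds: float
    _last_access: dict[str, float] = field(default_factory=dict, repr=False)

    def put(self, store_path: str, now: float) -> None:
        self._last_access[store_path] = now

    def get(self, store_path: str, now: float) -> bool:
        # A hit refreshes the entry's recency, postponing its collection.
        if store_path not in self._last_access:
            return False
        self._last_access[store_path] = now
        return True

    def collect_garbage(self, now: float) -> list[str]:
        # Remove every entry whose last access is older than the retention period.
        cutoff = now - self.retention_seconds
        expired = [path for path, t in self._last_access.items() if t < cutoff]
        for path in expired:
            del self._last_access[path]
        return sorted(expired)


DAY = 86_400
cache = RecencyCache(retention_seconds=30 * DAY)
cache.put("/nix/store/aaa-hello", now=0)
cache.put("/nix/store/bbb-world", now=0)
cache.get("/nix/store/aaa-hello", now=20 * DAY)  # recently used, so kept
print(cache.collect_garbage(now=40 * DAY))  # ['/nix/store/bbb-world']
```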

Architecture

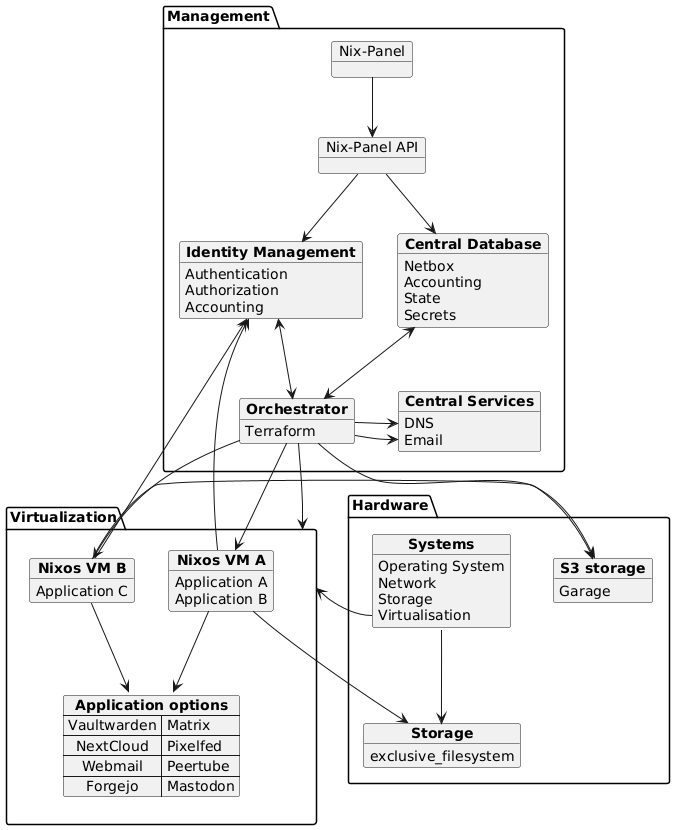

At the core of Fediversity lies a NixOS configuration module for a set of selected applications. We will support using it with different runtime environments, such as a single NixOS machine or a Proxmox hypervisor. Depending on the targeted runtime environment, deployment will further involve OpenTofu as an orchestrator. We further provide a reference front-end to configure applications. To ensure reproducibility, we also offer Nix packaging for our software.

To reach our goals, we aim to implement the following interactions between actors (depicted with rounded corners) and system components (see the glossary, depicted with rectangles).

Service portability

The process of migrating one's applications to a different host encompasses:

- domain registration: involves a (manual) update of DNS records at the registrar

- deployed applications: using the reproducible configuration module

- application data:

  - back-up/restore scripts using SelfHostBlocks

  - application-specific migration scripts, e.g. to reconfigure connections/URLs
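The migration steps can also be captured as a checklist that separates automated from manual work. This Python sketch is illustrative only and the `MigrationStep` type and `manual_steps` helper are hypothetical. The ordering is our assumption rather than something prescribed above: the manual DNS update comes last, so that the domain points at the new host only after applications and data are in place.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MigrationStep:
    description: str
    automated: bool  # False for steps needing manual action, e.g. at the registrar


MIGRATION_PLAN = [
    MigrationStep("deploy applications from the reproducible configuration module", True),
    MigrationStep("restore application data from SelfHostBlocks back-ups", True),
    MigrationStep("run application-specific migration scripts (connections/URLs)", True),
    MigrationStep("update DNS records at the registrar", False),
]


def manual_steps(plan: list[MigrationStep]) -> list[str]:
    """List the steps that must be performed by hand."""
    return [step.description for step in plan if not step.automated]


for number, step in enumerate(MIGRATION_PLAN, start=1):
    kind = "automated" if step.automated else "manual"
    print(f"{number}. [{kind}] {step.description}")
```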

Data model

Although the bulk of our configuration logic is covered by the configuration schema, our reference front-end application does store data of its own. Its data model is designed as follows to support the desired functionality, using crow's foot notation to denote cardinality:

Host architecture

While the core abstraction in Fediversity is a NixOS configuration module, a more complete example architecture for the web-hosting use case we aim to support as part of exploiting our results is as follows, where the VMs in question run Fediversity to offer our selected applications:

Break-down of project milestones

While some implementation details can only be decided once the technical challenges involved become clear, we can already outline the relevant milestones and some of their salient features at a high level:

- implement a way to run online services emphasising user autonomy and data portability

- disseminate our results by engaging the open-source community to further expand on work in this direction

- automated dev-ops workflows

- Backups for Forgejo

- use dedicated Nix builder

- initial focus on single application for development

- unify versioning

- Automated dependency updates

- infrastructure automatically deployed using continuous deployment

- Full integration test

- CI rejects failing deployments

- code reviewers can suggest changes

- fediversity apps reused in infra

- derive users and their keys from the keys directory

- Nix package overlays upstreamed

- Separate test environments for staging vs. production

- support password-protected personal SSH keys for deploying services in development

- Write all modules with destructured arguments

- ephemeral state is automatically provisioned

- external developers empowered to contribute

- code reviewers can suggest changes

- Reproducible Proxmox installation

- Continuous Integration builds available in a public cache

- docs: document having to load nix dev shell for pre-commit hook

- Update documentation on services

- knowledge base

- Document the semantics of our various domains

- Describe the hardware infrastructure needed to run Fediversity yourself

- reproducible project infrastructure

- NixOS configuration as the core abstraction

- fediversity apps reused in infra

- Generate documentation on the deployments from the code

- Write all modules with destructured arguments

- module upstreamed to nixpkgs

- panel bundled into Fediversity configuration

- automated dev-ops workflows

- exploit our work by enabling reproducible deployments of an initial set of portable applications

- applications deployed on command

- kick-started initial feedback cycle

- Proxmox back-end supports multiple users

- Proxmox resources are provisioned to deploy services to

- Users can configure their desired sub-domains in the online panel, so that the deployed services are assigned the desired sub-domains

- provision admin accounts for deployed services

- users can update their deployment configurations

- use immutable buckets from VMs

- Databases are provisioned so that services can use a central storage

- VMs use central file storage

- reproduce DNS VM

- SMTP service is provisioned so that applications can send emails

- fediversity apps reused in infra

- ephemeral state is automatically provisioned

- panel staging/production configuration

- code passes security check

- brought into production

- Have a DNS service running to allow users to tie services to their own domain

- garbage collection of unallocated resources

- Relevant email accounts are provisioned such that the operator may be contacted

- reference front-end is decoupled from version of configuration module

- specification published

- REST API available

- Hosting providers can update their operators' deployments

- code passes security check

- nix-less bootstrap

- key features improving user experience supported

- upstream configuration options exposed

- allow disabling service while retaining data

- enqueuing deployment syncs

- user can have multiple deployments

- Proxmox deployment allows scaling resources assigned to a VM

- View difference between configured and deployed state

- visualise schema changes

- aid needed actions on schema update

- single sign-on (SSO) for services

- delegating user management

- pooling instances to shared VMs

- on migration, allow reconfiguring monolithic vs distributed

- connecting an existing identity management service